by the PrecisionTox Data Management Working Group

The PrecisionTox project is an international program recently funded by the European Union. It aims to push into new scientific frontiers and advance our understanding of how chemical insults affect living organisms. To achieve this ambitious goal and embark on this Big Data Science endeavour, a dream team of experts in the fields of chemistry, biology, genomics, metabolomics, pathway modeling, and data management has been assembled.

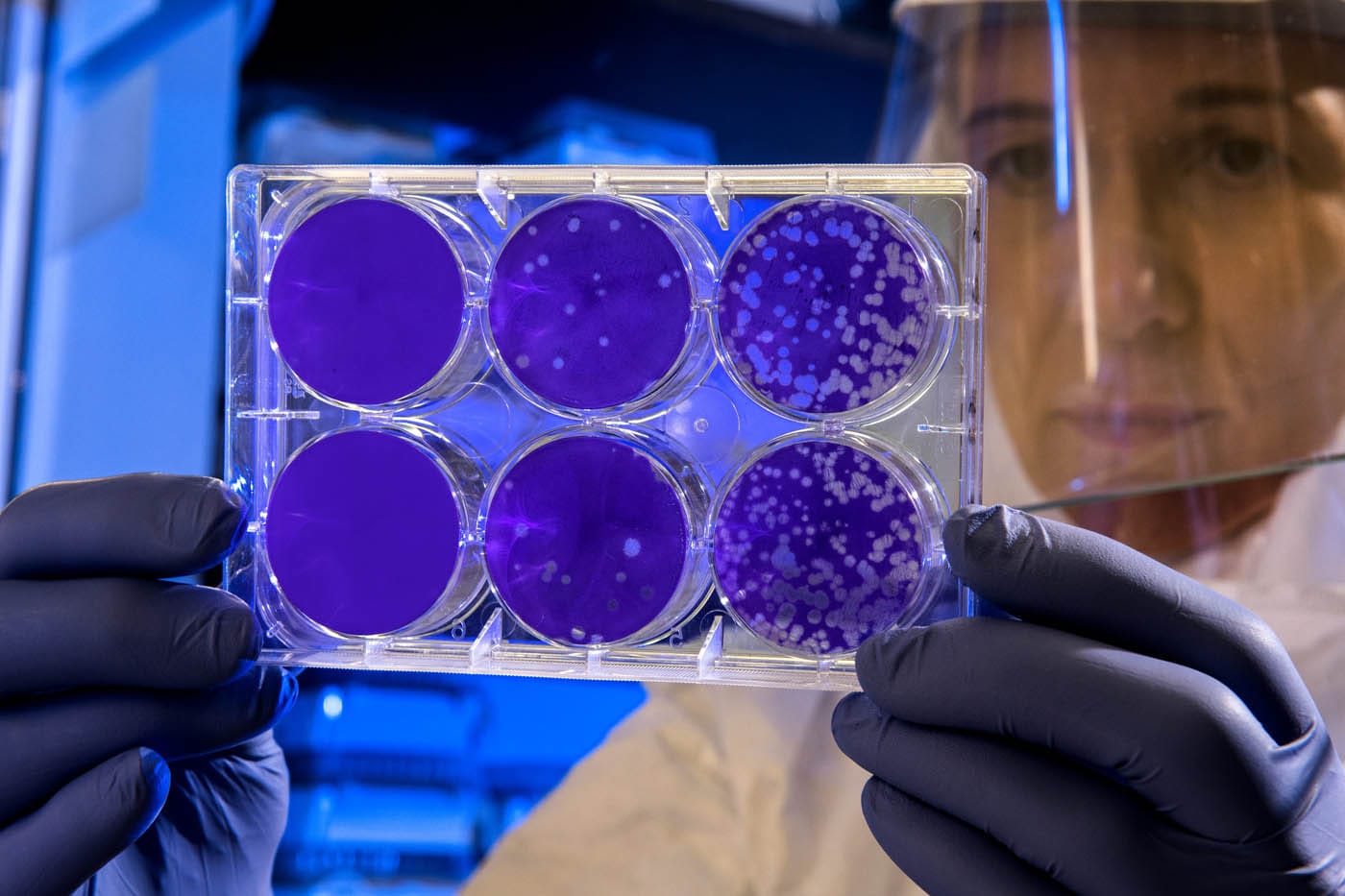

Ultimately, up to 250 potentially toxic chemical compounds will be tested in a standardized exposure study designed to shed new light on how they interfere with the biology of at least five model organisms and human cell lines.

The work will rest on carrying out an extensive array of phenotypic characterisation assays at macroscopic and molecular levels, complemented by a genetic analysis to discover determinants of resilience or sensitivity to chemical exposure. High throughput imaging techniques, metabolomics and transcriptomics, as well as classic phenotyping techniques, will be harnessed to build a truly unique picture of chemical effects in alternative model organisms for toxicity studies, that is, organisms classified as non-sentient. Once the dataset is obtained, mathematicians, modelers and data miners will exploit it to develop a new generation of tools capable of flagging potentially adverse effects of new molecules. Such tools could help predict the toxicity of xenobiotics, with the added benefit of possibly reducing the need to resort to animal testing.

Yet, for this analytical work to be carried out, it is central to the PrecisionTox project that the dataset be coherent and consistent. Therefore, a significant standardization effort is under way to ensure not only that high data quality is achieved, but also that high-quality metadata about the experiments is recorded, so that trustworthy data reaches the signal processing teams.

To this end, a data management working group has been assembled to “front load” standardization efforts in all aspects of the project. It works to deliver “FAIR by design” data production and handling practices. This means that, from the get-go, PrecisionTox is in a position to deliver standardized data, relying on open science data formats (such as mzML and ISA for metabolomics datasets, or Frictionless Data Package for reporting analytical results), community-agreed terminologies (i.e. a subset of the OBO Foundry ontologies used by model organism databases), identifier minting services, metadata template generation and provenance tracking.
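To give a flavour of what a Frictionless Data Package looks like, here is a minimal sketch of a descriptor for a table of analytical results, built with the Python standard library only. The package name, file path and column names are hypothetical and chosen purely for illustration; they are not taken from the PrecisionTox data management plan.

```python
import json


def build_descriptor():
    """Return a minimal Data Package descriptor as a plain dictionary.

    All names, paths and fields below are illustrative assumptions.
    """
    return {
        "name": "example-analytical-results",  # hypothetical package name
        "resources": [
            {
                "name": "results",
                "path": "results.csv",  # hypothetical file
                "format": "csv",
                "schema": {
                    # Each field declares a column name and its expected type.
                    "fields": [
                        {"name": "sample_id", "type": "string"},
                        {"name": "compound_id", "type": "string"},
                        {"name": "organism", "type": "string"},
                        {"name": "dose", "type": "number"},
                        {"name": "response", "type": "number"},
                    ]
                },
            }
        ],
    }


descriptor = build_descriptor()
# By convention, this JSON is saved as "datapackage.json" next to the data.
descriptor_json = json.dumps(descriptor, indent=2)
```

Because the descriptor travels with the data file, anyone receiving the package can read the expected columns and types without opening the CSV itself.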

All the methods outlined in the recently released data management plan are currently being implemented, trialed and refined in the initial pilot study, where dose-ranging experiments are carried out and high throughput molecular profiling procedures are tested. A continuous process of evaluation and improvement of the standard operating procedures will aim to streamline all data production, integrity checking and quality control. It will also strive to minimize the burden on human operators by taking advantage of modern computational practices, which make it possible to apply to data management the lessons learned from good software engineering practice: continuous integration, testing and consistency checking.
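As an illustration of how consistency checking borrowed from software testing might look in practice, the sketch below validates a small sample metadata table. It is an assumption-laden example, not the project's actual pipeline: the column names, the organism vocabulary and the rules themselves are hypothetical.

```python
import csv
import io

# Hypothetical required columns and controlled vocabulary (illustration only).
REQUIRED = ("sample_id", "organism", "compound_id", "dose")
ALLOWED_ORGANISMS = {"Daphnia magna", "Danio rerio"}


def check_rows(rows):
    """Return a list of human-readable problems found in the metadata rows."""
    errors = []
    seen_ids = set()
    for i, row in enumerate(rows, start=1):
        # Every required field must be present and non-empty.
        for field in REQUIRED:
            if not (row.get(field) or "").strip():
                errors.append(f"row {i}: missing {field}")
        # Sample identifiers must be unique across the table.
        sample_id = row.get("sample_id")
        if sample_id:
            if sample_id in seen_ids:
                errors.append(f"row {i}: duplicate sample_id {sample_id}")
            seen_ids.add(sample_id)
        # Organism names must come from the agreed vocabulary.
        organism = row.get("organism")
        if organism and organism not in ALLOWED_ORGANISMS:
            errors.append(f"row {i}: unknown organism {organism}")
        # Doses must be non-negative numbers.
        dose = row.get("dose")
        if dose:
            try:
                if float(dose) < 0:
                    errors.append(f"row {i}: negative dose {dose}")
            except ValueError:
                errors.append(f"row {i}: dose is not a number: {dose}")
    return errors


SAMPLE = """sample_id,organism,compound_id,dose
S1,Daphnia magna,C001,0.5
S1,Danio rerio,C002,1.0
S3,Unknown species,C003,-2
S4,Danio rerio,,abc
"""

problems = check_rows(csv.DictReader(io.StringIO(SAMPLE)))
for problem in problems:
    print(problem)
```

Run automatically on every data submission, in the same way a test suite runs on every code change, a check of this kind would reject inconsistent records before they reach the analysis teams.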